What is B2B A/B testing? - an HBR study

A/B testing should be helping you increase conversion rates, decrease your ad spend and make better design decisions on your website. However, there is a lot of room for failure for B2B marketers when optimizing their content through B2B A/B testing.

What is B2B A/B testing?

A/B testing means creating two (or more) versions of the same piece of content and showing each version to its own share of your audience. Once every version has been shown to a large enough audience, you can see from the analytics data which one improved your KPIs the most.
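To make the "share of your audience" part concrete, here is a minimal sketch of how a visitor could be assigned to a version. It is not how any particular testing tool does it; the function name `assign_variant`, the experiment name and the visitor ids are all made up for illustration. The idea is simply that hashing the visitor id gives every visitor a stable version, and the weights control the split.

```python
import hashlib

def assign_variant(user_id, experiment, weights):
    """Deterministically pick a variant for a user, in proportion to the weights.

    `weights` maps (hypothetical) variant names to relative traffic shares.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits of the hash to a number in [0, 1).
    point = int(digest[:8], 16) / 16**8
    total = sum(weights.values())
    cumulative = 0.0
    chosen = None
    for variant, weight in weights.items():
        chosen = variant
        cumulative += weight / total
        if point < cumulative:
            break
    return chosen  # the last variant also absorbs any floating-point rounding


# Assumed example: a 50/50 split over 10,000 made-up visitor ids.
weights = {"A": 50, "B": 50}
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "landing-page-headline", weights)] += 1
print(counts)
```

Because the assignment depends only on the visitor id and the experiment name, a returning visitor keeps seeing the same version, which is what you want when you compare results later.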

A classic on A/B testing is the Harvard Business Review article “The Surprising Power of Online Experiments”, which makes the business case through Microsoft and other tech giants that have seen huge gains from A/B testing. The article states: “Controlled experiments can transform decision making into a scientific, evidence-driven process—rather than an intuitive reaction.”

Common use cases for A/B testing include landing page design and ads. Almost everything is testable: you can test big and small design changes and content, too. Common A/B testing tools for websites include Google Optimize, VWO, and Optimizely. Most of the ad platforms support A/B testing out-of-the-box, too. With Google Optimize, you can start A/B testing your website for free.

The problem with data

The theory behind A/B testing is solid. If we can learn that one version works better than another, why shouldn’t we do it? Isn’t that what everyone should be doing? Let’s A/B test the hell out of everything and show our customers only the best versions!

For most B2B companies and marketers out there, it’s not that simple. One problem is that A/B testing needs a lot of data. A LOT. You should probably have at least a thousand conversions before drawing any conclusions based on your data. For each version, for every test. How many conversions do you get monthly? Fewer than a thousand? Fewer than a hundred? Fewer than 10?

The HBR article I cited earlier tells us that “Any company that has at least a few thousand daily active users can conduct these tests.” Let’s say we have “a few thousand daily active users”. But are these users similar? Is the value of each user the same? When dealing with businesses, that’s usually not the case.

B2B customers are widely different from each other

Let’s say you are testing your blog titles. You find a version that seems to be working great: it shows a major increase in all the key metrics, including social media shares, clicks, backlinks and email subscriptions.

But what if the new title version works well only for people with no buying intention? What if the new title works well for students googling for their homework but not very well for your current major customers who you’d like to engage with? What if the new ads work well for getting quote requests – from the wrong kind of customers? What if you do get subscriptions for your SaaS product with the new landing page – but they cancel soon after you’ve spent a lot of time and effort onboarding them?

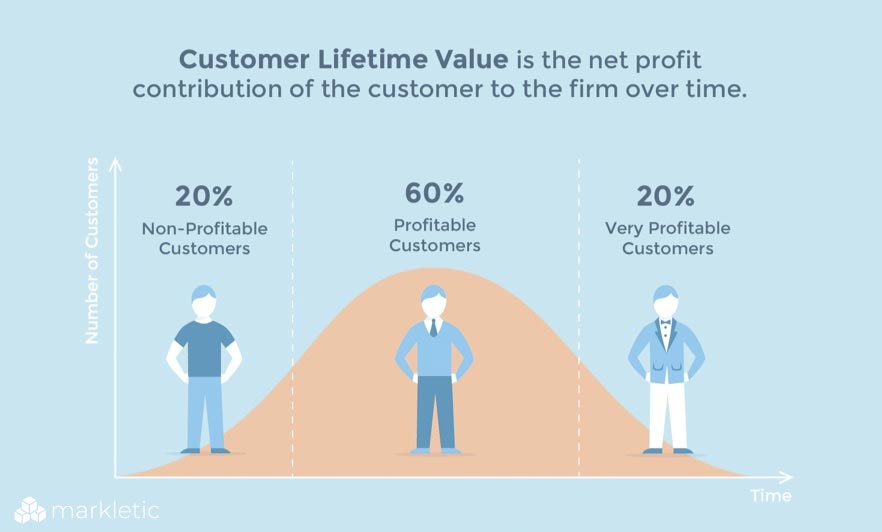

The problem is that A/B testing tests the top of the funnel, but in B2B we want to optimize the bottom of the funnel. We don’t optimize for clicks or conversions; we optimize for customer lifetime value.
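A small sketch shows how the two goals can point in opposite directions. All the numbers are invented for illustration, and `summarize` is a hypothetical helper, not part of any analytics tool: version B doubles the conversion rate, but the customers it attracts are worth far less.

```python
def summarize(visitors, customer_lifetime_values):
    """Conversion rate and lifetime value per visitor for one version."""
    conversions = len(customer_lifetime_values)
    return {
        "conversion_rate": conversions / visitors,
        "value_per_visitor": sum(customer_lifetime_values) / visitors,
    }


# Made-up data: each list holds the lifetime value (in euros) of the
# customers a version eventually produced from 1,000 visitors.
version_a = summarize(1000, [12000, 8000, 15000])              # 3 large accounts
version_b = summarize(1000, [900, 1200, 600, 800, 1000, 700])  # 6 small accounts

print("A:", version_a)
print("B:", version_b)
```

A tool that only counts conversions would declare B the winner here, even though A brings in several times more value per visitor.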

A great growth hacking framework that focuses on customer lifetime value is the AARRR framework (Acquisition, Activation, Retention, Referral, Revenue).

Getting numbers is easy, getting numbers that help is hard

“But I don’t need that many conversions to see results!”, you might say. “My A/B testing tool shows me a green tick and the text ‘95% probability’. I should be fine, right?”

Unfortunately, no.

In fact, whatever you test, most of the time you’ll get the statistical “95% probability” at some point in the test.

Mats Einarsen ran something called an A/A test to demonstrate this. He simulated two identical versions tested against each other. Clearly, there shouldn’t be any difference between two identical pages. However, when he ran the simulation a thousand times, each with 200,000 participants (notice the amount of data, see the previous point), in 531 of the runs a confidence level of 95% was reached at some point in the test. If the test had been stopped after seeing that magical “95% confidence” for the first time, the result would have been wrong over half of the time.
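You can try a scaled-down version of this yourself. The sketch below is not Einarsen's original simulation; the conversion rate, sample sizes, number of runs and the plain two-proportion z-test are all my own assumptions, chosen so that it runs in a second or so. Both "versions" convert at exactly the same rate, and the test is checked after every 50 visitors per version.

```python
import math
import random

def z_score(conv_a, conv_b, n):
    """Two-proportion z statistic for two versions with n visitors each."""
    pooled = (conv_a + conv_b) / (2 * n)
    if pooled in (0.0, 1.0):
        return 0.0  # no variation yet, so no evidence either way
    std_err = math.sqrt(pooled * (1 - pooled) * 2 / n)
    return (conv_a - conv_b) / n / std_err

def run_aa_test(rng, rate, visitors_per_arm, peek_every):
    """Return (significant at any peek, significant at the planned end)."""
    conv_a = conv_b = 0
    ever_significant = False
    significant_now = False
    for n in range(1, visitors_per_arm + 1):
        conv_a += rng.random() < rate  # both versions share the same true rate
        conv_b += rng.random() < rate
        if n % peek_every == 0:
            # 1.96 is the two-sided cut-off for "95% confidence"
            significant_now = abs(z_score(conv_a, conv_b, n)) > 1.96
            ever_significant = ever_significant or significant_now
    return ever_significant, significant_now


rng = random.Random(42)  # fixed seed so the sketch is repeatable
runs = 200
results = [run_aa_test(rng, rate=0.1, visitors_per_arm=2000, peek_every=50)
           for _ in range(runs)]
peeking_rate = sum(any_peek for any_peek, _ in results) / runs
final_rate = sum(at_end for _, at_end in results) / runs
print(f"'significant' at some peek: {peeking_rate:.0%}")
print(f"'significant' at the planned end: {final_rate:.0%}")
```

The share of runs that look "significant" at some peek should come out far above the 5% you would expect from a 95% threshold, while the share at the planned end stays close to it. The more often you peek, the worse it gets.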

The only way to make sure your test is not susceptible to this kind of error is to define the sample size before the test. So that’s a thousand conversions for each of your tests and each version.
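If you want to estimate the sample size for your own numbers, a common textbook approximation for comparing two conversion rates can be sketched like this. The 2% baseline, the 10% relative lift, the 5% significance level and the 80% power are assumptions I picked for the example, and `visitors_per_version` is just an illustrative name.

```python
import math
from statistics import NormalDist

def visitors_per_version(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per version to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Assumed scenario: 2% of visitors convert today, and we hope for a 10% lift.
needed = visitors_per_version(baseline_rate=0.02, relative_lift=0.10)
print(needed, "visitors per version")
print(round(needed * 0.02), "expected conversions on the current version")
```

With these assumptions the answer lands at roughly 80,000 visitors per version, which is well over a thousand conversions each. Smaller lifts or lower conversion rates push the number up quickly.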

Collecting that kind of data takes time, even with a good amount of traffic. It also takes a lot of your team’s effort. That time could be spent creating new content or improving the navigation, but what if those changes skew the results of a running test?

When the A/B testing works

Another issue with A/B testing for B2B is that even if you could overcome all these problems related to sample size, the gains you can expect are not very large. Typically they are in the single-digit percentages, and they come at the top of the funnel, where the optimization happens. What would be the business benefit of getting 5% more website visitors? How many euros a year in profit?
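A back-of-the-envelope calculation helps answer that question for your own business. Every number below is a made-up assumption (traffic, conversion rate, profit per customer), and the sketch optimistically assumes the extra visitors convert just as well as your current ones.

```python
def extra_annual_profit(monthly_visitors, uplift_percent,
                        visitor_to_customer_rate, profit_per_customer):
    """Yearly profit from a traffic uplift, if extra visitors convert like the rest."""
    extra_visitors = monthly_visitors * 12 * uplift_percent / 100
    return extra_visitors * visitor_to_customer_rate * profit_per_customer


# Assumed: 2,000 visitors a month, 5% uplift, 0.5% of visitors become
# customers, and each customer is worth 3,000 euros in profit.
profit = extra_annual_profit(2000, 5, 0.005, 3000)
print(f"about {profit:,.0f} euros a year")
```

In this scenario the uplift is worth about 18,000 euros a year, before subtracting the tool subscription and the hours spent on testing. Plug in your own figures and see whether the result justifies the effort.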

Interestingly, the HBR article also says that “every 100-millisecond difference in performance had a 0.6% impact on revenue”. Does your A/B testing tool add less than 100 milliseconds to your page load? Google uses website speed as a ranking factor, too. Is your A/B testing tool affecting your rankings? What’s the cost of this compared to not running any tests?

Then how to optimize for B2B audiences?

But if B2B A/B testing is so hard, what can a B2B marketer do? It doesn’t have to be an “intuitive reaction”, as the HBR article puts it. I would suggest a more qualitative approach.

The first step would be to try and gain a better understanding of your target groups and their needs. In the best case, you can use interviews, observation, and other research. In the worst case, you can do a small workshop with your team. Go through some of your customers and try to group them and give the groups common denominators, for example. What could you offer to these stereotypes? What are they looking for?

With this new information, what kind of new content could you produce? Are some of these target groups underrepresented in your current marketing mix? Can you find new PR opportunities through these new target groups?

Second, your website is probably not very good at telling what your company does and what the benefits of choosing your product or service are.

Why do I think so? Because I rarely see a B2B company website that is particularly good in this regard. Try clarifying your front page. I’m sure the benefits of half a day’s work will be greater than what you can achieve in half a year of testing.

Third – and this is the most important part – do more. There’s no shortcut to success here, either. Go and create new ad groups, and try to find a new target group for your ads. Send PR outreach emails like there’s no tomorrow and do link building more systematically than you ever have. Participate in industry events or, even better, try to get a speaking slot there.

A/B testing is often done because it’s easy. If you can report 20% increases in something to your boss, why not do it? It only costs $399 a month and a few hours of work. But if something sounds too good to be true, it probably is. A/B testing is not always worth it for a B2B marketer, but hard work always is.